Compression bomb for self-defense

Something I have wanted to do for a very long time, but never got around to, is serving compression bombs to malevolent web clients browsing my blog. If it works, it could be worth considering for all the websites and services I host.

Recently a lot of people have been complaining about their servers being overloaded by various bots, mostly bots scraping data to train AI. Some of those bots don’t respect the rules set in the robots.txt file.

And obviously, attackers, scanning websites for vulnerabilities to exploit, will not follow the rules in robots.txt.

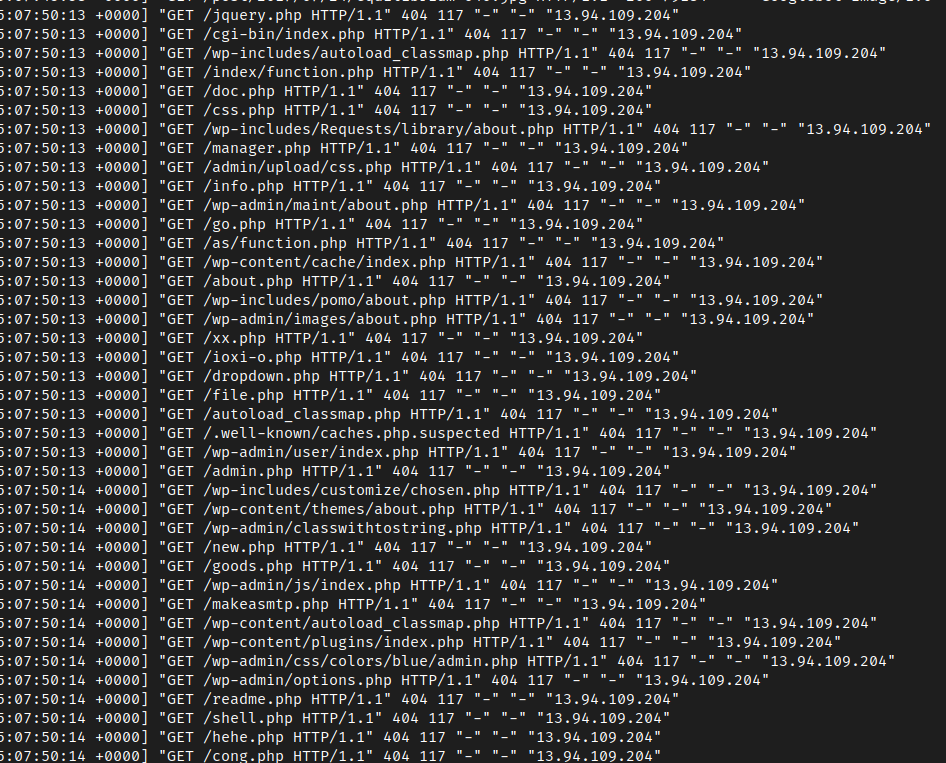

Here is an example of a malevolent bot scanning my blog (coming from Microsoft’s network…):

What to do?

One thing that can be done is to slow down the misbehaving bots so it will take them longer to scan, increasing their costs, leaving slightly more time for everyone to patch their vulnerable services.

There are several ways to do that. One common technique is called tarpitting: the service answers requests very slowly (possibly only once the client has been identified as malevolent). Depending on how it’s done, it also has a cost on the server side (more connections kept open, etc.). Cloudflare has an interesting labyrinth method for that.

Another solution is to send an answer that will be hard to process by the malevolent client. In this case a compression bomb can be used.

Compression bomb

What is it?

A compression bomb works on a protocol that is compressed. The idea is that the client sends a request to the server, the server sends a relatively small response in a compressed format that will expand to a very large response for the client. If the client has not been programmed or configured to handle that, the response may crash the client.
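To make "programmed to handle that" concrete, here is a minimal sketch of what a careful client could do: decompress with a hard cap on the output size. The 1 MiB limit and the function name `safe_gunzip` are my own assumptions for illustration, not something taken from a real scanner.

```python
import gzip
import zlib

MAX_OUTPUT = 1 * 1024 * 1024  # hypothetical 1 MiB cap on decompressed size


def safe_gunzip(data: bytes, limit: int = MAX_OUTPUT) -> bytes:
    """Decompress gzip data, refusing to produce more than `limit` bytes."""
    # wbits=31 tells zlib to expect a gzip header and trailer.
    decompressor = zlib.decompressobj(wbits=31)
    # The second argument (max_length) stops output after limit + 1 bytes,
    # so memory use stays bounded even if the input is a bomb.
    output = decompressor.decompress(data, limit + 1)
    if len(output) > limit:
        raise ValueError("decompressed body exceeds limit, possible compression bomb")
    return output


small = gzip.compress(b"hello")
bomb = gzip.compress(bytes(16 * 1024 * 1024))  # 16 MiB of zeros

print(safe_gunzip(small))
try:
    safe_gunzip(bomb)
except ValueError as error:
    print("rejected:", error)
```

A client that skips this kind of check and simply expands the whole body in memory is the one a compression bomb is aimed at.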

Can it be used on the web?

For the web, the HTTP protocol allows several compression formats. For instance, when connecting to a website, my web browser sends the following header:

Accept-Encoding: gzip, deflate, br, zstd

Stating that it supports: gzip, deflate, brotli and zstandard.

To which my server replies with a Content-Encoding header specifying which of those formats the response is compressed with.

So, as long as the client supports a compression format the server can answer with, a compression bomb can be sent.
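As a rough sketch of that negotiation, the function below parses an Accept-Encoding value and picks a bomb the client claims to be able to decode. The `BOMBS` mapping and both function names are hypothetical, and the parsing is deliberately simplified (it honours an explicit `q=0` but otherwise ignores preference order).

```python
from typing import Optional, Set, Tuple

# Hypothetical mapping from Content-Encoding token to a pre-built bomb file,
# ordered by the preference a server might choose.
BOMBS = {"br": "bomb.br", "zstd": "bomb.zst", "gzip": "bomb.gz"}


def accepted_encodings(header: str) -> Set[str]:
    """Parse an Accept-Encoding header into the set of usable encodings."""
    accepted = set()
    for item in header.split(","):
        token, _, params = item.strip().partition(";")
        token = token.strip().lower()
        quality = 1.0
        for param in params.split(";"):
            name, _, value = param.strip().partition("=")
            if name.strip().lower() == "q":
                try:
                    quality = float(value)
                except ValueError:
                    quality = 0.0
        # An explicit q=0 means the client refuses this encoding.
        if token and quality > 0:
            accepted.add(token)
    return accepted


def pick_bomb(header: str) -> Optional[Tuple[str, str]]:
    """Return (encoding, filename) for the first bomb the client can decode."""
    accepted = accepted_encodings(header)
    for encoding, filename in BOMBS.items():
        if encoding in accepted:
            return encoding, filename
    return None


print(pick_bomb("gzip, deflate, br, zstd"))
```

The first element of the result would go in the Content-Encoding response header; `None` means no bomb can be served to that client.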

How to create one?

Creating a compression bomb file is quite easy (but maybe not the most effective way):

dd if=/dev/zero bs=1G count=10 | gzip -c > bomb.gz

dd if=/dev/zero bs=1G count=10 | brotli -c > bomb.br

dd if=/dev/zero bs=1G count=10 | zstd -c > bomb.zst

This takes a 10 GiB file (full of zeros) and compresses it to:

- a 10 MiB gzip file (that’s still quite heavy);

- an 8.3 KiB brotli file (that’s quite an impressive compression ratio);

- a 329 KiB zstandard file.

So, a client receiving one of those files will have to decompress a 10 GiB file, hopefully using a bit of CPU and saturating the memory.

Unfortunately, the most supported format is gzip, but it also has the worst compression ratio.
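To get a feel for the gzip ratio without producing 10 GiB of data, here is a small sketch that streams zeros through Python's standard `zlib` module and only counts the compressed bytes. The function name and the 64 MiB sample size are my own choices; brotli and zstandard are left out to keep the example limited to the standard library.

```python
import zlib


def gzip_bomb_size(uncompressed_size: int, chunk_size: int = 1024 * 1024) -> int:
    """Return the gzip-compressed size of `uncompressed_size` zero bytes."""
    # wbits=31 selects the gzip container; level 9 is the strongest setting.
    compressor = zlib.compressobj(level=9, wbits=31)
    chunk = bytes(chunk_size)  # a reusable block of zeros
    compressed = 0
    remaining = uncompressed_size
    while remaining > 0:
        block = chunk[:remaining] if remaining < chunk_size else chunk
        compressed += len(compressor.compress(block))
        remaining -= len(block)
    # flush() emits whatever is still buffered, plus the gzip trailer.
    compressed += len(compressor.flush())
    return compressed


size = 64 * 1024 * 1024  # 64 MiB here; the commands above use 10 GiB
packed = gzip_bomb_size(size)
print(f"{size} bytes -> {packed} bytes, ratio {size / packed:.0f}:1")
```

Because the data is compressed chunk by chunk and never stored, memory use stays small whatever the target size.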

Note: the file contains only the null character, which used to be an invalid character in HTML (I am not sure whether that is still the case), so this could easily be detected. An alternative is to send a file containing only whitespace (yes ' ' emits a space followed by a newline on each line):

yes ' ' | head -c 10G | gzip -c > bomb.gz # ~30 MB

yes ' ' | head -c 10G | brotli -c > bomb.br # 8.3 KiB

yes ' ' | head -c 10G | zstd -c > bomb.zst # ~11 MB

Except for brotli, the size of the compressed files is way bigger 🫤.

Implementation

There are several ways to implement it:

- a PHP script that returns the bomb when a known bad User-Agent is detected;

- a middleware sending the bomb to blacklisted and malicious IP addresses.
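As an illustration of the middleware idea, here is a minimal WSGI sketch keyed on the User-Agent. This is not what I run on my blog: the `BAD_AGENTS` pattern, the class name and the tiny in-memory bomb are all placeholders. Like a simple substring check, it treats any mention of gzip in Accept-Encoding as support.

```python
import gzip
import re

# Hypothetical pattern of unwanted User-Agent strings; adjust to your own logs.
BAD_AGENTS = re.compile(r"(badbot|evilscanner)", re.IGNORECASE)

# A tiny stand-in for the real bomb so the sketch runs anywhere; in practice
# this would be the content of a pre-built bomb.gz read from disk.
BOMB = gzip.compress(bytes(1024 * 1024))


class BombMiddleware:
    """WSGI middleware that answers flagged clients with a gzip bomb."""

    def __init__(self, app, bomb=BOMB, bad_agents=BAD_AGENTS):
        self.app = app
        self.bomb = bomb
        self.bad_agents = bad_agents

    def __call__(self, environ, start_response):
        agent = environ.get("HTTP_USER_AGENT", "")
        encodings = environ.get("HTTP_ACCEPT_ENCODING", "").lower()
        # Only clients that advertise gzip will try to decompress the body.
        if self.bad_agents.search(agent) and "gzip" in encodings:
            start_response("200 OK", [
                ("Content-Type", "text/html"),
                ("Content-Encoding", "gzip"),
                ("Content-Length", str(len(self.bomb))),
            ])
            return [self.bomb]
        return self.app(environ, start_response)


def site(environ, start_response):
    """Placeholder application standing in for the real website."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"hello"]


application = BombMiddleware(site)
```

Any WSGI server can serve `application`; regular visitors fall through to the wrapped site untouched.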

In my case, my blog is static, it contains only files, no code is executed (apart from the web server itself of course). In a previous blog post I explained how the content could be pre-compressed, so no need to compress HTML (or CSS, or JS…) on the fly when serving them to clients.

There are two use cases for which I want to return a compression bomb:

- malevolent scanners;

- bad bots not following robots.txt rules.

Bad bots

I want to send the compression bomb when the bots are accessing a file they should not access.

Let’s start by defining a forbidden admin directory in the website’s robots.txt:

# Disallow admin folder for all (this will be used to put a compression bomb in).

User-agent: *

Disallow: /admin/

The robots.txt file should probably be deployed a few days before going further, to make sure there are no bots already scanning the website that are not aware of the forbidden directory.
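Before deploying, the rule can be sanity-checked with Python's standard `urllib.robotparser`, which interprets robots.txt the way a well-behaved crawler is expected to. This is only a quick sketch: "ExampleBot" and example.org are placeholders, not names from my setup.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# "ExampleBot" and example.org are placeholders used only for this check.
for url in ("https://example.org/admin/index.html", "https://example.org/posts/"):
    verdict = "allowed" if parser.can_fetch("ExampleBot", url) else "disallowed"
    print(url, verdict)
```

If the admin URL is not reported as disallowed, a polite bot could end up in the trap, which is exactly what should be avoided.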

In the admin folder, add the compression bombs renamed as index.html (so bomb.gz becomes index.html.gz).

Add an empty admin/index.html file so nginx won’t always return a 404.

Now the page needs to be found by the bad bots, so I added a link to it in the footer template (using Hugo templates):

<a class="hidden" href="{{ .Site.BaseURL }}admin/index.html" rel="nofollow">Do not click</a>

And a bit of CSS to hide it:

.hidden {

display: none;

}

So, the link is hidden, has a nofollow directive and is excluded in robots.txt.

I can’t make it more explicit to bots that they MUST NOT go there.

Scanners

For vulnerability scanners, it’s just a matter of reading the logs, seeing which URLs are most commonly accessed, and adding a redirect from those URLs to the compression bomb. Given that my blog does not have any PHP pages and that those are the most scanned, redirecting all of those requests to the compression bomb should be quite efficient.

My blog is currently using nginx, which unfortunately supports only gzip for pre-compression. At some point I should investigate serving my blog with something else, like static-web-server. Snippet to add in the server configuration:

location ~ \.php$ {

if ($http_accept_encoding !~ gzip) {

return 404;

}

try_files /admin/index.html =404;

}

For all PHP files, if the client supports gzip compression, return the compression bomb; otherwise return a 404.

Check

First let’s check if it works on the admin page:


- Line 8: the response being returned is compressed with gzip.

- Line 9: the content is 10 MiB long, matching the size of the index.html.gz file.

- Line 21: the response is abnormally slow.

- Line 23: the actual file size is in fact 10 GiB.

In the server’s log I can see: "GET /admin/ HTTP/1.1" 200 10420385 "-" "Wget/1.21.3", proving the 10 MiB file has been sent.

So now we know that bots ignoring the robots.txt file and scraping the admin directory will be served a compression bomb.

Now, let’s check the redirect on PHP files:

$ wget https://blog.desgrange.net/foo.php

Resolving blog.desgrange.net (blog.desgrange.net)...

Connecting to blog.desgrange.net (blog.desgrange.net)||:443... connected.

HTTP request sent, awaiting response... 404 Not Found

2025-05-03 18:38:17 ERROR 404: Not Found.

$ wget --compression=auto -S https://blog.desgrange.net/foo.php

Resolving blog.desgrange.net (blog.desgrange.net)...

Connecting to blog.desgrange.net (blog.desgrange.net)||:443... connected.

HTTP request sent, awaiting response...

HTTP/1.1 200 OK

Accept-Ranges: bytes

Cache-Control: max-age=604800

Content-Encoding: gzip

Content-Length: 10420385

Content-Type: text/html

Etag: "6813e18f-9f00a1"

Referrer-Policy: no-referrer-when-downgrade

Server: nginx

Strict-Transport-Security: max-age=63072000; includeSubDomains; preload

X-Content-Type-Options: nosniff

X-Frame-Options: DENY

X-Xss-Protection: 1; mode=block

Length: 10420385 (9,9M) [text/html]

Saving to: ‘foo.php’

foo.php 100%[====================================================================================================================>] 9,94M 252KB/s in 41s

(251 KB/s) - ‘foo.php’ saved [10737418240]

Fetching a PHP page without compression returns a 404; fetching it with compression returns the compression bomb.

The same trace is visible in the server’s logs: "GET /foo.php HTTP/1.1" 200 10420385 "-" "Wget/1.21.3" and the downloaded file is again 10 GiB long.

Conclusion

After a few days, filtering the server’s log file for admin or php (grep -e admin -e php) shows that the compression bomb has been fetched for both cases (scanners and bad bots).

For scanners, there were usually a lot of calls made within a few seconds, coming from a single IP address at a time (see screenshot above).

Now, most of those cases seem to be doing only one call, for example: "GET /wp-content/plugins/hellopress/wp_filemanager.php HTTP/1.1" 200 5856692 "-" "-" "52.169.78.48" showing that about 5.6 MiB of the bomb was downloaded (instead of the full 10 MiB).

I’m wondering if it’s what the client downloaded before giving up or detecting the trap or crashing.
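To spot such partial downloads more systematically than with grep, a short script can compare the bytes sent with the size of the bomb. This is a sketch under assumptions: the regular expression only matches the request, status and byte-count fragments quoted in this post (the rest of my log format is ignored), and `bomb_hits` is a name I made up.

```python
import re

BOMB_SIZE = 10420385  # size in bytes of index.html.gz, as seen in the logs

# Matches the request, status and byte count; any other field on the
# log line is ignored by this pattern.
LOG_PATTERN = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) (?P<sent>\d+)'
)


def bomb_hits(lines):
    """Yield (path, bytes_sent, fraction_of_bomb) for bomb-related requests."""
    for line in lines:
        match = LOG_PATTERN.search(line)
        if match is None or match["status"] != "200":
            continue
        path = match["path"]
        # Same two traps as in this post: PHP URLs and the admin directory.
        if path.endswith(".php") or path.startswith("/admin"):
            sent = int(match["sent"])
            yield path, sent, sent / BOMB_SIZE


sample = [
    '"GET /foo.php HTTP/1.1" 200 10420385 "-" "Wget/1.21.3"',
    '"GET /wp-content/plugins/hellopress/wp_filemanager.php HTTP/1.1" 200 5856692 "-" "-" "52.169.78.48"',
]
for path, sent, fraction in bomb_hits(sample):
    print(f"{path}: {sent} bytes ({fraction:.0%} of the bomb)")
```

A fraction well below 100% means the client closed the connection before the end of the file, although the log alone cannot tell whether it gave up, detected the trap or crashed.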

Some cases look good: several non-PHP URLs fetched, my server returned a 404 as usual, then a fetch on a PHP URL, and that’s it, the scan ended. That really sounds like the compression bomb crashed the scanner.

But in other cases… there is almost no difference, the full 10 MiB of the compression bomb is sent but the scanner continues as usual, downloading the bomb several times in fact. Although it looked a bit slower than previous scans I have seen, and the bomb was not always fully downloaded. My only hope in this case is that it increased the attacker’s infrastructure cost.

The main downside of this technique is that the gzipped file sent is still quite big, since gzip has a maximum compression ratio of about 1000:1, which can be problematic if you pay for the bandwidth used.

As Cloudflare stated in their blog post, once attackers detect this kind of trick, they might adjust their techniques to avoid being trapped, and we will be back to the original situation. At least I had some fun doing this 😉.